The famous Delft-made flapping wing drone, Delfly, can now use stereo vision to avoid objects. Dr Sjoerd Tijmons of the Delft micro aerial vehicle lab taught the little dragonfly lookalike to recognise objects and see depth just like humans do.

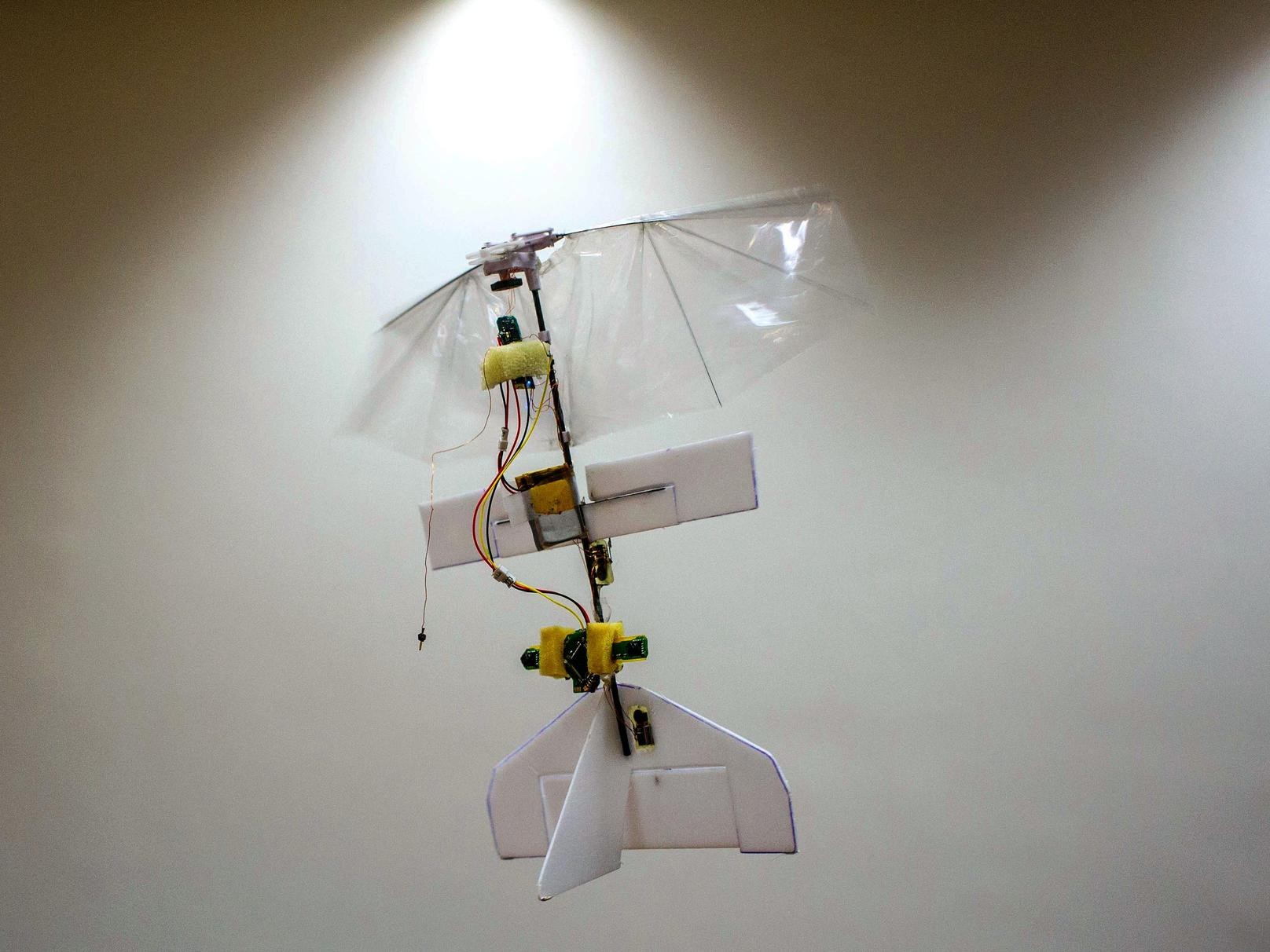

Delfly can now circle around the laboratory avoiding objects and fly autonomously as long as the batteries last. (Photo: MAV Lab)

Small drones that can avoid objects and thus fly semi-autonomously have been around for some years now. But usually they need a ground-based computer to help them navigate. Dr Sjoerd Tijmons taught the Delfly drone to fly all by itself. He defended his PhD thesis, entitled Autonomous Flight of Flapping Wing Micro Air Vehicles, at the Faculty of Aerospace Engineering this week.

Normally, Micro Air Vehicles (MAVs) capture images with their on-board camera and send them to a computer on the ground, which does the heavy data processing and then sends navigational commands back to keep the drone from crashing into objects. The flapping-wing drone Delfly, with its 28-centimetre wingspan, used to work this way too.

Tijmons, however, ‘pimped Delfly up’. He gave it stereo vision. You can switch the Delfly on and let it take off, and it will circle around the laboratory, avoiding objects, for as long as the batteries last, which is about nine minutes.

The Delfly only weighs twenty grams

Tijmons placed the cameras seven centimetres apart, just like human eyes. Combined, they weigh four grams, which is quite a lot. With the cameras on board, the Delfly weighs twenty grams. Add a few more grams, and the drone will no longer be able to take off. This means that there is hardly any room left for a data processing unit, let alone one that would consume a lot of energy, since battery capacity on the drone is also limited.
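To see why the distance between the two cameras matters, here is a minimal sketch of the standard pinhole stereo relation: depth equals focal length times baseline divided by disparity, where disparity is how far the same object shifts between the left and right image. Only the seven-centimetre baseline comes from the article. The focal length value and the function name are assumptions for illustration, not details of Delfly's actual cameras or software.

```python
BASELINE_M = 0.07  # camera separation reported in the article (7 cm)

# Hypothetical focal length expressed in pixels; the article does not give one.
ASSUMED_FOCAL_PX = 100.0


def depth_from_disparity(disparity_px: float,
                         focal_length_px: float = ASSUMED_FOCAL_PX,
                         baseline_m: float = BASELINE_M) -> float:
    """Return the distance to an object in metres using Z = f * B / d.

    disparity_px is the horizontal shift, in pixels, of the same object
    between the left and right image. A shift of zero (or less) means the
    object is too far away to measure, so infinity is returned.
    """
    if disparity_px <= 0:
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Larger shifts between the two images mean closer obstacles.
    for shift in (1, 2, 5, 10):
        print(f"disparity {shift:2d} px -> {depth_from_disparity(shift):.2f} m")
```

Because disparity is measured in whole pixels on a low-resolution camera, far-away objects all collapse into the smallest one or two disparity values, so depth estimates become coarse as distance grows.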

“Small and lightweight, MAVs still have very limited autonomous flight capabilities, mainly due to weight restrictions,” the Delft researcher explains. “Since MAVs need to lift their own weight, the sensory and processing devices that can be taken on board are limited. We have to calculate the totals of the size, weight and power (SWaP) of these components to make sure that they fall within the total available power for flight.”

Selecting images

So, what did Tijmons do to solve this problem? He developed algorithms that help Delfly decide which images to analyse. “I used a very simple processing unit that consumes little energy. The system preselects the images that are relevant for depth analysis. So it gets rid of a lot of unwanted data, or noise as we call it.”
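The article does not say which criterion the system uses to preselect images. As a rough sketch of the general idea only, the example below assumes one plausible criterion: keep a frame only if it has enough horizontal texture, since matching between two side-by-side cameras relies on intensity changes along the horizontal direction. The function names and the threshold are hypothetical and are not taken from Tijmons's work.

```python
from typing import List, Sequence

# A greyscale image as rows of pixel intensities (0-255).
Image = Sequence[Sequence[int]]


def horizontal_texture(image: Image) -> float:
    """Mean absolute difference between horizontally adjacent pixels."""
    total = 0
    count = 0
    for row in image:
        for left, right in zip(row, row[1:]):
            total += abs(right - left)
            count += 1
    return total / count if count else 0.0


def preselect(frames: Sequence[Image], min_texture: float = 8.0) -> List[Image]:
    """Keep only frames with enough texture to be worth analysing.

    min_texture is an illustrative threshold, not a value from the article.
    """
    return [frame for frame in frames if horizontal_texture(frame) >= min_texture]


if __name__ == "__main__":
    blank_wall = [[50, 50, 50, 50] for _ in range(3)]   # no detail to match
    striped = [[0, 100, 0, 100] for _ in range(3)]      # strong vertical edges
    kept = preselect([blank_wall, striped])
    print(f"kept {len(kept)} of 2 frames")
```

A cheap test like this costs one pass over the pixels, so a low-power processor can discard uninformative frames before spending effort on depth analysis.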

There is still room for improvement. The cameras’ very low resolution sometimes hinders Delfly’s ability to analyse depth. But Tijmons has a plan B for this. He also taught the robot to calculate distance to obstacles by sight. If it bumps into an object, Delfly will remember the shape and other characteristics to avoid crashing into it again.

Since the memory available on the on-board camera system can only store a small number of images, an efficient image description algorithm is used to compress the image data.

“Stereo vision is more efficient than depth analysis using sight,” says Tijmons. “But I think the best would be for a flying robot to have both systems.”

Do you have a question or comment about this article?

tomas.vandijk@tudelft.nl
