The Cognitive Robotics (CoR) lab teaches robots to understand, plan and act. Since its grand opening on 17 April 2024, it has done so in a new Mechanical Engineering laboratory where research and experimentation go hand in hand.

The CoR lab was opened on 17 April 2024. (Photo: Thijs van Reeuwijk)

It is a long walk to the far end of Block F in the Mechanical Engineering (ME) building. Blue garlands hang in the large white hall, there are glasses on the table, and a refreshment trolley drives autonomously among the guests. It is one of many robots here: driving robots the size of a breakfast plate or a pedal bin, a dog-like robot from Boston Dynamics, robotic arms and drones.

Professor Martijn Wisse, leader of the Robot Dynamics group, is pleased with the new lab and relieved that it is finally ready after five years of planning. The Department’s growth (120 staff including PhD students, and 100 master's students a year) called for more space, but other departments in the faculty are also growing. The solution was eventually found in the hall previously occupied by the Process & Energy Lab.

“The Lab buys robots to make them smarter,” explains ME Dean Professor Fred van Keulen, referring to the Cognitive Robotics (CoR) Department. The Department was formed by professors from two former departments: Systems & Control and Biomechanical Engineering. Its mission is to make robots smarter and thus more flexible when interacting with humans. Applications are being developed for healthcare, industry, horticulture and retail – places where humans and robots meet. On the opening day, researchers at eight stands showed what they are working on.

Taking each other into account

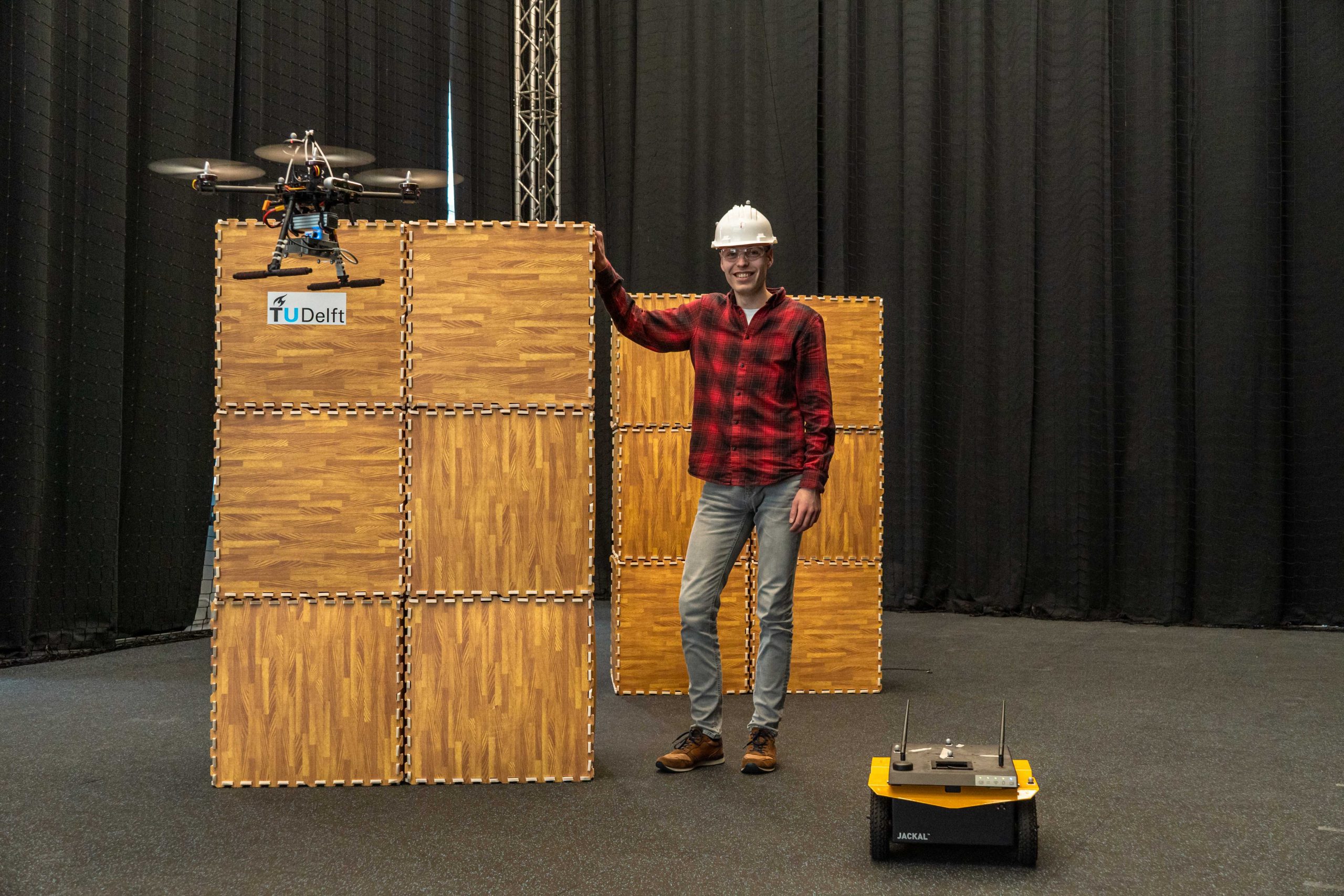

The largest space in the lab is occupied by the Mobile Robotics Lab – a test space where humans, robots and drones mingle. A mesh cordons off the space, which measures eight metres by 13 metres and is 7.5 metres high. Twelve infrared cameras generate the images for a three-dimensional survey of everyone in the space. Each object is identified by a unique pattern of pearl-shaped reflectors. This tells the robots where someone is standing, what they are looking at, and which way they are likely to go, just as people watch each other. This is a prerequisite for successful human-robot interaction.
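The article does not describe how the lab turns camera data into predictions. As a hedged illustration only, assuming the tracking system delivers timestamped floor positions for each person, the simplest guess at "which way they are likely to go" is to assume they keep walking at their current speed and direction (constant-velocity extrapolation). The names `Sample` and `predict_position` are hypothetical and not part of the lab's software.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Sample:
    """One hypothetical tracking reading for a person (illustrative only)."""
    t: float  # timestamp in seconds
    x: float  # floor position in metres
    y: float


def predict_position(prev: Sample, curr: Sample, horizon: float) -> tuple[float, float]:
    """Guess where a person will be `horizon` seconds after `curr`,
    assuming they keep their current speed and direction."""
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("samples must be in increasing time order")
    # Velocity estimated from the two most recent readings.
    vx = (curr.x - prev.x) / dt
    vy = (curr.y - prev.y) / dt
    # Straight-line extrapolation along that velocity.
    return (curr.x + vx * horizon, curr.y + vy * horizon)


# Someone walking 1 m/s along x: in 2 s they are expected 2 m further on.
print(predict_position(Sample(0.0, 1.0, 2.0), Sample(0.5, 1.5, 2.0), horizon=2.0))
# → (3.5, 2.0)
```

Real human-motion prediction usually takes much more into account, such as gaze direction and other people nearby; this sketch only shows the basic idea of extrapolating from tracked positions.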

Ride into the future

In the car simulator, the technology also focuses on humans. The scenario is an autonomous car ride – a vision of the future that is getting closer by the day. The setup explores how people experience being driven by a computer. Do passengers chill out with their mobile phones, or do they get carsick? An eye tracker monitors where passengers look, and physiological measurements record how they feel. Researcher Dr Barys Shyrokau and PhD student Vishrut Jain are first making the simulation in the Delft Advanced Vehicle Simulator as realistic as possible. After that, test subjects can board for a futuristic ride. Toyota is the field partner for this research.

Roboshopper

Orange juice, pasta and a packet of soup. Not exactly a complicated shopping list, but for a robot it is. Where should the robot look, how does it recognise the groceries, and what does it do if someone walks in between or a packet falls? These eventualities are programmed into this shopping robot, designed with field partner Ahold Delhaize. If a shelf is empty, the robot looks further down the aisle. If a packet falls, it takes a new one. In short, the robot adapts to the cluttered everyday world. The way it learns is also more flexible. Researcher Max Spahn shows how easily the robot arm can be moved by hand. An operator can show the robot arm a movement, after which the robot takes over and adjusts the movement to the height or distance. The idea is to get the robot out of the warehouse and into the shop, but to be usable there, it has to be smart enough.
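The article does not say how the robot adjusts a demonstrated movement. As a rough sketch only, assuming the demonstration is recorded as a list of (x, y, z) waypoints, one simple approach is to keep the shape of the path and blend in an offset so that it starts at the arm's current position and ends at a new target, such as a higher shelf. The function `adapt_demonstration` and its parameters are hypothetical and not the team's software; research systems often use more sophisticated learning-from-demonstration methods.

```python
from __future__ import annotations

# A waypoint (x, y, z) in metres.
Point = tuple[float, float, float]


def adapt_demonstration(demo: list[Point], start: Point, goal: Point) -> list[Point]:
    """Hypothetical helper: reshape a demonstrated path to run from `start` to `goal`.

    Every waypoint is shifted by an offset that blends smoothly from
    (start - first demo point) at the beginning to (goal - last demo point)
    at the end, so the shape of the demonstrated motion is kept.
    """
    n = len(demo)
    if n < 2:
        raise ValueError("a demonstration needs at least two waypoints")
    first, last = demo[0], demo[-1]
    start_offset = [start[a] - first[a] for a in range(3)]
    goal_offset = [goal[a] - last[a] for a in range(3)]
    adapted: list[Point] = []
    for i, p in enumerate(demo):
        s = i / (n - 1)  # progress along the demo: 0 at the first point, 1 at the last
        blend = [(1 - s) * start_offset[a] + s * goal_offset[a] for a in range(3)]
        adapted.append((p[0] + blend[0], p[1] + blend[1], p[2] + blend[2]))
    return adapted


# A reach demonstrated for a shelf at 1.0 m, replayed for a shelf at 1.5 m.
demo = [(0.0, 0.0, 1.0), (0.5, 0.0, 1.2), (1.0, 0.0, 1.0)]
for waypoint in adapt_demonstration(demo, start=(0.0, 0.0, 1.0), goal=(1.0, 0.0, 1.5)):
    print(waypoint)
```

Blending the offset along the path, rather than shifting every point by the same amount, lets the start stay where the arm already is while the end moves to the new height or distance.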

School robot

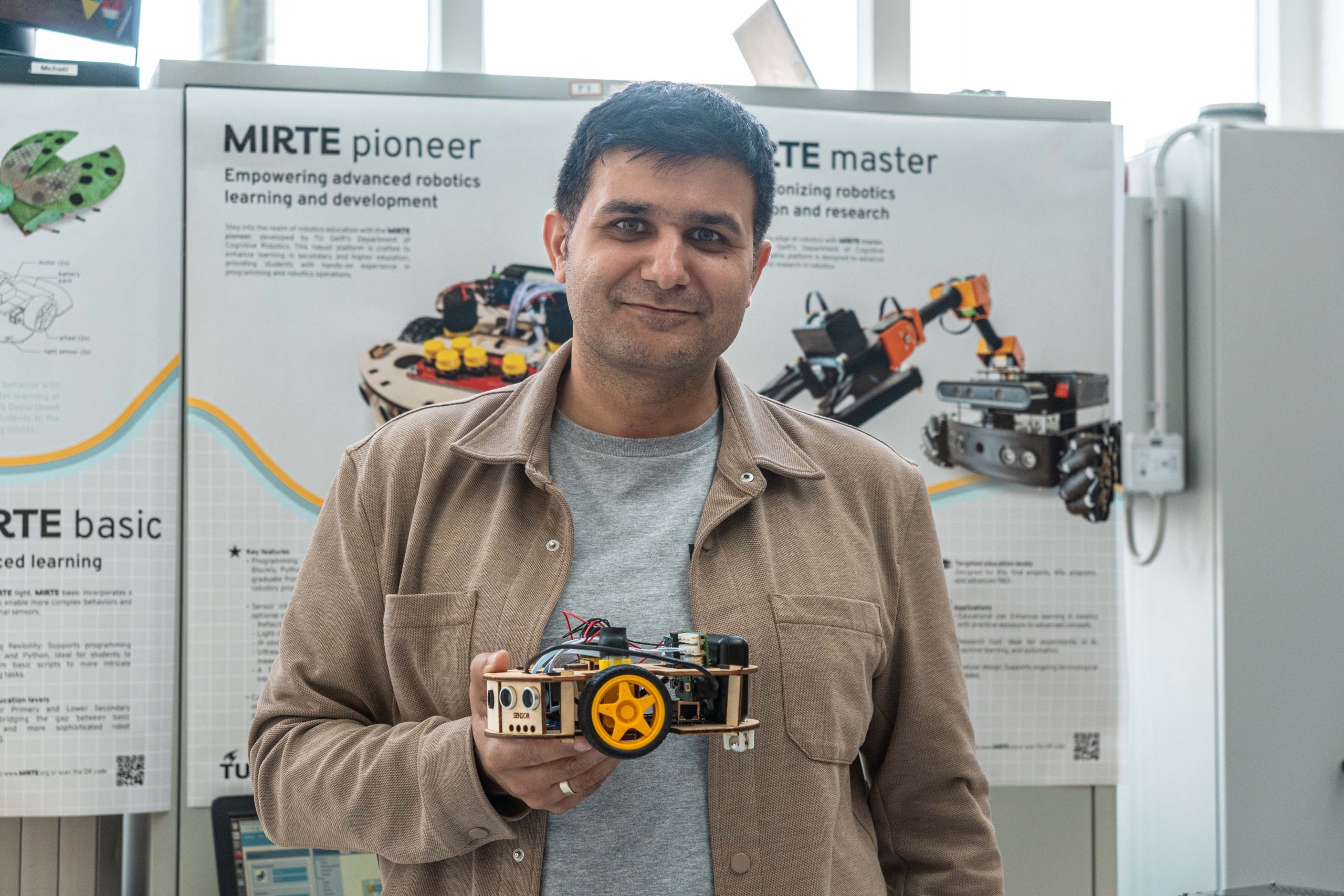

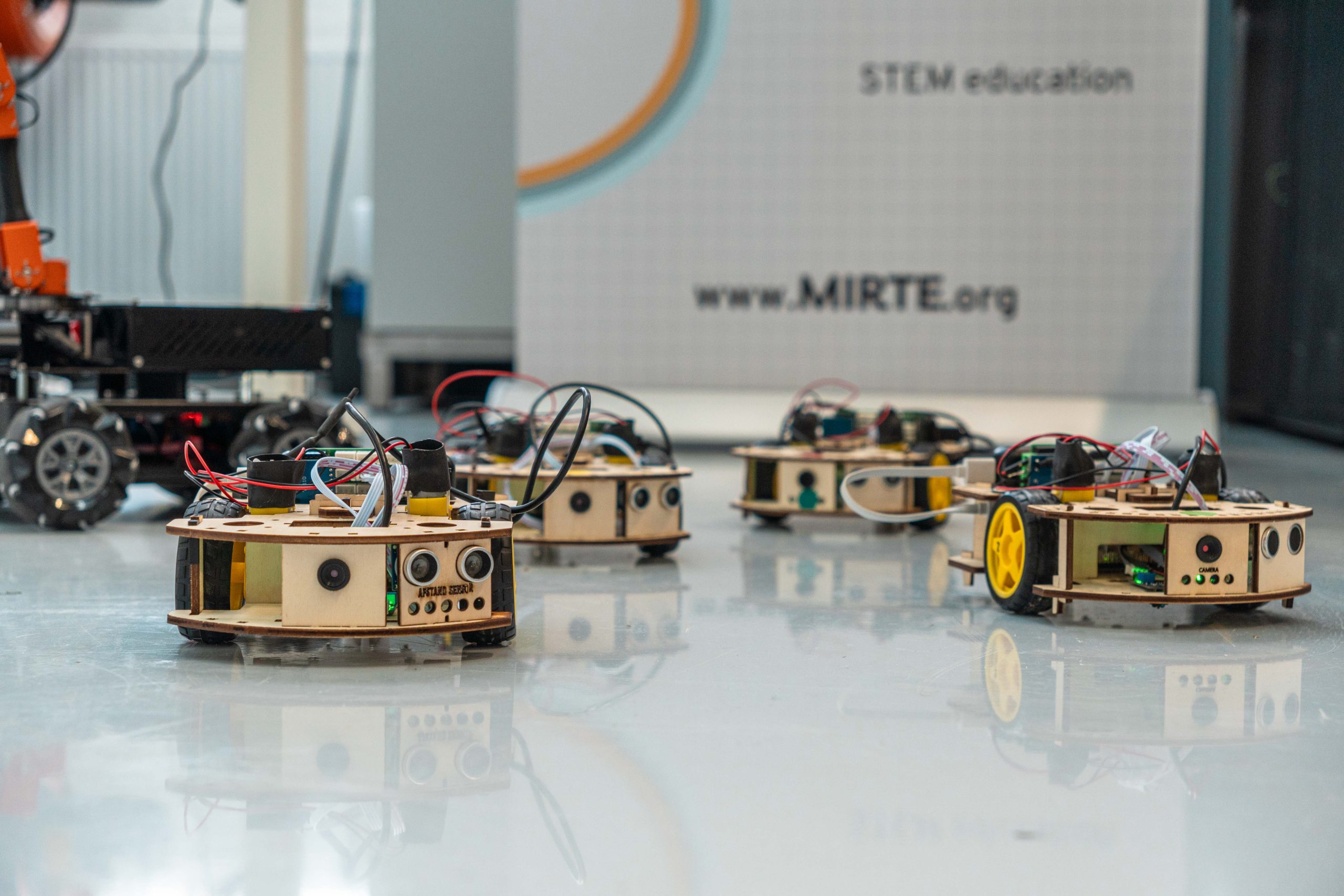

Teacher Martin Klomp developed Mirte, a robot that serves as a learning platform from primary school up to PhD level. The name stands for Mirte, an Inspiring Robot for Technology Education. Mirte contains a small computer the size of a matchbox, a microcontroller to control the electric motors, a battery and several sensors for light, distance and the surroundings. “In primary school, students program with functional blocks. Secondary school students use a programming language like Python, and university students dive into ROS (Robot Operating System, Ed.) code,” Klomp explains. He and the TU Delft Science Centre jointly organise workshops for secondary schools.

Robot researcher Khaldon Araffa came to TU Delft from Syria via Ukraine. He works as a postdoctoral researcher and is also involved in workshops for children at primary schools and libraries. In Ukraine, he started the Invest in Young Talent initiative in 2006 to give children hands-on experience with robots and better prepare them for the future. He is now continuing this mission with Mirte robots at the European School of The Hague.

Do you have a question or comment about this article?

j.w.wassink@tudelft.nl