A chat with an AI system can identify what is bothering you and help with mild symptoms of depression. It does not replace a psychologist, says researcher Dr Franziska Burger.

(Illustration: Auke Herrema)

The third Monday in January is known as Blue Monday: bleak, rainy weather, short days and failed New Year’s resolutions. While doing her PhD research at the TU Delft Faculty of Electrical Engineering, Mathematics and Computer Science (EEMCS), Dr Franziska Burger developed an AI system to help with depression.

She had previously trained in cognitive science (a bachelor’s degree at the University of Tübingen) and in artificial intelligence (a master’s degree in AI in Nijmegen). During her PhD research with Professor Mark Neerincx and Dr Willem-Paul Brinkman (EEMCS), she brought these two tracks together in the design of an AI system that can support cognitive therapy in treating depression.

Why did you choose this topic?

“More PhD students suffer from symptoms of depression than the average person,” Franziska Burger explains. “When I started, 30% of all PhD students had symptoms, and Covid only increased this percentage. That’s why we wanted to do something for PhD students, but the project has since become broader. The interview technique we use is quite simple. It is the same technique that patients are given as homework.”

I understand that it involves a kind of chat with a computer that can figure out what is going on from someone’s answers?

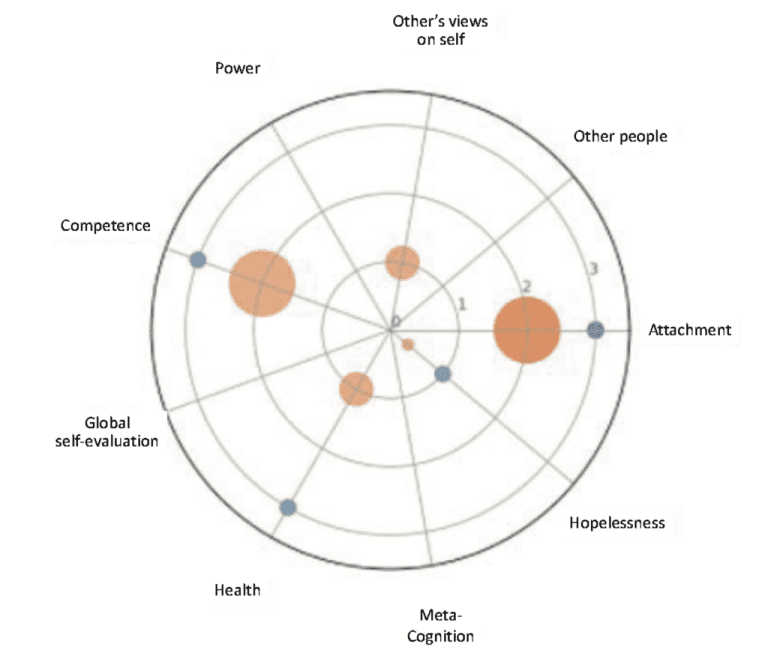

“The intention was to use AI to automatically determine what is going on with someone. That can help the person take a step towards self-knowledge, the beginning of any improvement. Because the chat sessions are repeated over time, the system becomes more and more certain about what is going on. ‘State the emotion you felt in this situation in one or two words’, it may ask. We show any recurring ‘schemas’ and their intensity on a scale of 0 to 3. This value reflects how certain the AI algorithm is.”

In psychology, a ‘schema’ is a thought pattern that underlies symptoms of depression. Many fears and insecurities can be traced back to nine core concepts, or a combination of them: bonding, competence, self-confidence, health, power, self-knowledge, other people, hopelessness, and the opinions of other people. Based on what a person writes in a chat, the AI system determines the connection with one or more of these thought patterns and presents them in a radar-like chart.

The ‘spider plot’ works as a radar screen for negative thoughts. Blue dots show the analysis of the most recent chat in terms of related thought patterns (here bonding, health and hopelessness). Orange dots show the results of previous chats. (Image: Franziska Burger)

Does the AI algorithm go beyond identifying underlying schemas? For example, does it also suggest remedies or tips?

“We haven’t got that far yet, but it could be a next step. Therapists try to steer someone’s thinking patterns in a more positive direction. We have not taken that step.”

Do you see a role for the AI algorithm in the overburdened mental health system?

“For now, it has at most a supporting role. It is most effective when the AI system supports regular therapy. Therapists often give patients homework to do at home. An AI system can give automatic feedback on that homework: the patient gets feedback, and the therapist gets additional information.”

Can AI make that homework more appealing?

“Many of the systems in use are very similar to a paper worksheet or a self-help book, just on a website. Do you really gain anything from that? I don’t think so. AI can make a dialogue smarter, more interesting and more fun through good feedback. That can make a chat a lot more engaging. That’s how I see it.”

Were psychologists involved in the development of the system?

“We had discussions with cognitive psychologists before the study, and they put us on the track of schemas. Coders with a background in psychology linked words to schemas. They labelled the data, and we used those labels to train the AI.”

In your introduction, you mentioned the capacity problem in mental health care as a motivation for this project. How do you view this now?

“We are seeing a shift from regular to preventive mental health care. Preventive care carries fewer risks, because you assume you are working with healthy people. That is where you can use AI: the risks are small, and if you develop and test it responsibly, the algorithm can help people gain self-knowledge.”

And for patients with depression?

“I think AI can be used responsibly for patients with depression, but only if the AI is well understood. It would also be useful if a therapist is involved to help interpret and discuss what the AI is spitting out. I would not deploy a standalone AI-based chat system in cases of severe depression. The model we have developed now is too basic and the risks are too high.”

So, the psychologist cannot yet be replaced by an AI system?

“No, and that is not our goal either. Our work is more about collaboration than replacement.”

- Download an example chat between the AI system and a volunteer from the field test.

- Franziska Burger, Supporting Electronic Mental Health with Artificial Intelligence, download the PhD thesis

Do you have a question or comment about this article?

j.w.wassink@tudelft.nl
